If you read, watch, or listen to anything these days, you cannot avoid the topic of artificial intelligence. It is everywhere. AI has become such a buzzword that “GPT” is rapidly turning into a verb.

Echoes of the Dot-Com Bubble

Anyone who remembers the dot-com bubble will notice striking parallels. That bubble coincided with the explosive growth of the World Wide Web, and although many ventures failed, e-commerce eventually took off, web-based businesses flourished, and the internet became foundational to modern life.

In much the same way, today’s AI boom builds upon decades of prior research—particularly in natural language processing and machine learning. If you are curious, look up ELIZA, a chatbot developed in the 1960s that mimicked a Rogerian psychotherapist. It remains a fascinating early example of conversational AI and a reminder that today’s “new” ideas often have deep roots.

A Personal Journey Through Technology

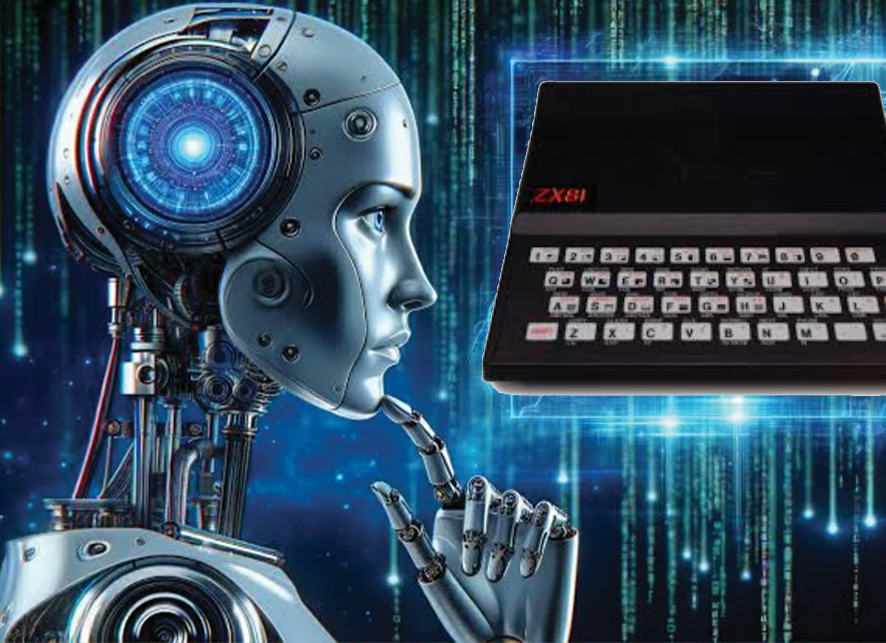

My own journey with technology began when I received a ZX81 for Christmas—a small black computer that set me on a lifelong path in computing. Alongside that fascination, I have always enjoyed science fiction, especially the works of Isaac Asimov. His stories often sparked internal debates about the future of machines and intelligence.

Years later, at university (and, admittedly, sometimes in the pub), my peers and I would debate ideas such as machine learning. We imagined what these systems might one day achieve, but we were held back by a shared belief: unless a computer could be 100 per cent accurate, 100 per cent of the time, it could never truly “take over.”

In that blissful ignorance, we carried on with our studies, confident that the machines were not coming for our jobs.

Back then, it all felt theoretical—a topic for essays and late-night conversations rather than real-world change. But as the web matured, those ideas began to shift from science fiction to something that felt increasingly tangible.

The Web Changed Everything

Then came the web. It brought access to vast, varied datasets, and alongside it came powerful processing infrastructure and scalable storage. Together with improved training techniques, these meant that ideas once confined to research labs became practical tools. These three ingredients (data, computing power, and better algorithms and training methods) are now widely regarded as the foundations of modern AI.

This combination has unlocked extraordinary potential. Systems that once struggled to recognise a photograph can now analyse medical scans, generate complex designs, or help people communicate across languages. The question is no longer whether AI can match human performance, but how best to combine human insight with machine efficiency.

Accountability and the Black Box

Despite the excitement, AI is not a silver bullet. It brings with it serious questions about responsibility and transparency—issues that societies, businesses and governments are still learning to navigate.

Human beings expect accountability: when someone makes a mistake, we want to know what went wrong and who is responsible. We have already seen incidents involving autonomous vehicles, such as the 2023 case in San Francisco in which a Cruise robotaxi injured a pedestrian, prompting regulatory investigations and the suspension of the company’s permits. If a loved one were misdiagnosed by an AI system whose reasoning even its developers could not fully explain, would we accept that? Would we trust that lessons had been learned?

These questions are not arguments against AI, but reminders that progress must be matched by ethics, safety, and trust.

So, Will AI Take Over?

AI now plays an ever-larger role in daily life. But rather than “taking over,” it is more accurate to say it is moving in—quietly embedding itself in how we live, work, and make decisions. Properly trained AI can outperform humans in many specific tasks, yet it also depends on human oversight, context, and creativity.

We will always need people to guide, question, and interpret what AI produces. The future will be less about human versus machine, and more about human with machine.

The Road Ahead

There is undoubtedly a place for AI, particularly in the “low-hanging fruit”: routine, well-defined tasks where significant efficiencies can be achieved quickly. These opportunities merit serious consideration.

Recent surveys suggest that around 80 to 90 per cent of organisations now use AI in at least one part of their business (McKinsey, The State of AI 2025). The challenge is not adoption but thoughtful integration—how we use these tools responsibly, effectively, and with purpose.

AI will continue to shape industries, accelerate discovery, and redefine the way we work. But as we weave it into our systems, our businesses, and our lives, we must continue to ask: What are we willing to delegate—and what must remain human?

If we can keep asking that question, perhaps the real breakthrough will not be artificial intelligence—but the wisdom with which we use it.